LayerNorm is annoying for mechanistic interpretability research (“[…] reason #78 for why interpretability researchers hate LayerNorm” – Anthropic, 2023).

Here’s a Hugging Face link to a GPT2-small model without any LayerNorm.

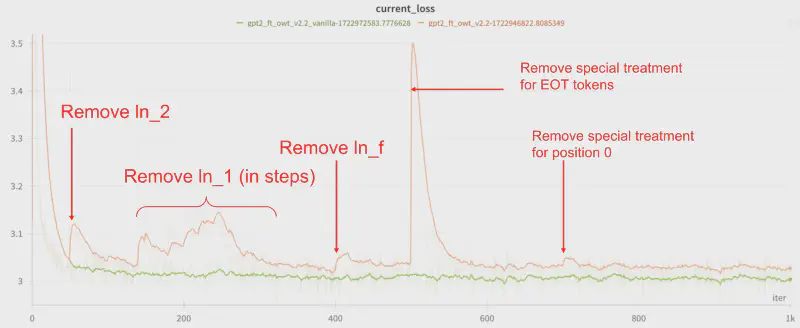

The final model is only slightly worse than a GPT2 with LayerNorm.

| Dataset | Original GPT2 | Fine-tuned GPT2 with LayerNorm | Fine-tuned GPT2 without LayerNorm |

|---|---|---|---|

| OpenWebText (ce_loss) | 3.095 | 2.989 | 3.014 (+0.025) |

| ThePile (ce_loss) | 2.856 | 2.880 | 2.926 (+0.046) |

| HellaSwag (accuracy) | 29.56% | 29.82% | 29.54% |

For more details, see my paper or AlignmentForum post.